CYBER SECURITY - IS IT TIME TO RECONSIDER THE IT LANDSCAPE AND THE DESIGN OF INDUSTRIAL COMPUTERISED EQUIPMENT?

For the last couple of years, ransomware has represented a serious and poorly mastered threat for firms as well as for private individuals. The recent issues with "WannaCry" showed how real such a menace can be, even if this first global attack [1] had a limited impact because of some malware "design failures". We should be aware, though, that the situation may become worse.

Beyond the particular case of "WannaCry", the current situation of the industrial IT landscape - especially for computerised equipment such as laboratory, manufacturing, and infrastructure equipment - should be seriously reconsidered. The deliberate intention to destroy systems, infrastructure, data, and companies must be acknowledged rather than underestimated or ignored.

Taking a look at discussion forums, the general trend of the various comments related to "WannaCry" is that such attacks are only possible because of "system administrator laziness and the company stinginess avoiding investment in software updates". Even if, in some cases, this statement could be at least partially correct, the situation is far more complicated than these initial statements, in particular for the regulated pharmaceutical industry and the GxP environment.

Update management

"WannaCry" primarily affected "obsolete" and non-updated operating systems [2]. The main reason why obsolete operating systems are still in use is the complexity of the computer systems controlling manufacturing and analytical processes and the limited availability of software updates for those systems. It is not just the requalification/revalidation effort; a further significant factor is the multiple compatibility issues between the application and the operating system when updates are applied.

Since the early 1990s, Microsoft has been promoting the use of Windows® not only for office purposes but also as an appropriate control platform for industrial and process equipment. Various frameworks and services were made available to support the implementation of equipment control features.

Implemented natively since Windows® NT4, DCOM - Distributed Component Object Model - provides a communication framework supporting application server infrastructure. Among other things, this framework supports the implementation of OPC - OLE [3] for Process Control - used for process automation. However, the "Lovsan" worm outbreak during summer 2003 already showed the vulnerability of process control systems relying on DCOM. The deployment of an operating system patch that simply closed communication ports to limit the propagation of the "Lovsan" worm caused automation systems to malfunction, since these ports are also used by DCOM.

Recommendation

Vienna, Austria6 May 2025

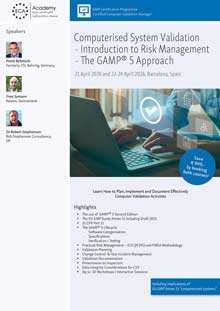

Computerised System Validation: Introduction to Risk Management

The careless application of Windows® XP Service Pack 2 on PCs controlling laboratory equipment caused multiple serious operational failures in the laboratory environment. The industry and its equipment suppliers had to learn the hard way that uncontrolled changes, such as adding operating system patches and service packs, could make process control systems and laboratory equipment inoperable.

Such cases caused equipment suppliers to become very restrictive in supporting operating system updates, unfortunately including the virtualisation of applications controlling equipment. Additionally, the legitimate wish to limit support efforts and costs, as well as to improve business figures, too often means that expensive equipment is no longer really supported at software level after a few years. Although hardware and mechanics are still supported - including spare parts - operating system and control software updates are significantly limited. The industry faces the situation that expensive equipment, which actually represents an investment for one to two decades, can after only a few years be operated only with an obsolete software configuration.

The poor maturity of Windows® operating systems since the release of Vista®, and the frequently changing operating system architecture and interface design, cause significant development and update costs with each new operating system for the suppliers of equipment and process control applications. This situation explains (but cannot justify) why the industry stuck with Windows® XP for so many years, long after Microsoft announced the end of support for Windows® XP. On the other hand, the temptation is high for equipment suppliers to partially cover the additional development costs through the sale of new equipment hardware instead of supporting software updates for existing equipment.

The description above is not intended to justify poor business practices but aims to explain the current situation.

IT infrastructure design: robustness vs. bad practices

There are multiple reasons to apply good IT infrastructure and network design practices for ensuring the reliability and the robustness of IT infrastructure and for improving information security; e.g.:

- Network segregation

  - Office network, laboratory network(s), automation networks, administration networks, etc.

- Resilient security concept ensuring a strong protection and confinement of each operation environment

  - For instance, deploying internal firewalls between the laboratory networks or automation networks and the rest of the IT infrastructure.
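
The segregation principle above can be illustrated with a minimal sketch of a default-deny policy between network zones. The zone names, the rules, and the port numbers are hypothetical and chosen only for illustration; a real firewall rule set would be derived from the site's own risk assessment.

```python
from dataclasses import dataclass

# Hypothetical zones, for illustration only.
ZONES = {"office", "laboratory", "automation", "administration"}


@dataclass(frozen=True)
class Rule:
    src: str
    dst: str
    port: int


# Explicit allow-list (hypothetical). Any flow between two different
# zones that is not listed here is denied.
ALLOWED = {
    Rule("administration", "laboratory", 22),
    Rule("administration", "automation", 22),
    Rule("office", "laboratory", 443),
}


def is_allowed(src: str, dst: str, port: int) -> bool:
    """Return True if traffic from zone src to zone dst on port may pass."""
    if src not in ZONES or dst not in ZONES:
        raise ValueError(f"unknown zone: {src!r} or {dst!r}")
    if src == dst:
        # Traffic inside one zone does not cross an internal firewall.
        return True
    return Rule(src, dst, port) in ALLOWED


if __name__ == "__main__":
    # SMB (port 445) from the office network to the automation network
    # is not on the allow-list, so it is blocked.
    print(is_allowed("office", "automation", 445))
    print(is_allowed("administration", "automation", 22))
```

The design choice illustrated here is that the allow-list is explicit and short: a worm spreading over a file-sharing port from the office network finds no rule permitting it to reach the laboratory or automation zones.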

Today, such design measures do not represent significant additional hardware and software costs. First and foremost, the implementation of such measures requires subject matter expertise as well as the elaboration of a robust IT infrastructure and IT security concept. In addition to a robust IT infrastructure design, good IT operation practices must be implemented, e.g.:

- Active network and operation monitoring

  - Multiple monitoring solutions (open source as well as commercial) are available and make active and reliable monitoring of network and IT infrastructure operation possible. There is no excuse today for not actively monitoring IT operations, including active alarming in case of trouble.

  - Monitoring not only provides a current picture of the IT infrastructure components and networks; based on historical data, it also enables the comparison of the current situation against previous configurations and, based on accurate configuration management, the identification of possible deviations and their root causes resulting from updates or scope changes.

- Reliable (and paranoid) data management strategy

  - Viruses and ransomware can easily jeopardize the integrity of backup data if it is not adequately protected. It is not acceptable to maintain permanent online access to backup volumes. If users or systems have access to backup data, ransomware will be able to access that backup data as well in case of contamination. At the very least, backup volumes must be disconnected after the backup has been performed. Better still, a central backup application should be deployed for performing backup activities, and the backup data should be stored in a dedicated part of the IT infrastructure with limited access (e.g. over a dedicated backup network only accessible by central backup servers). Virtualisation enables the implementation of robust backup concepts at hypervisor level with a very limited impact on the running virtual machines.

  - Because of the criticality of today's situation, appropriate and defensive backup strategies must be elaborated and implemented, as well as regularly verified, trained, and exercised. Generally, incremental backups must be limited to very short-term purposes (less than 24 hours). Daily backups should be performed at least as differential backups. Full backups should be performed regularly in order to provide a reliable data baseline enabling fast and reliable data reconstruction in case of a disaster. Finally, the critical question is: "How much data can I afford to lose?" The answer defines the RPO (Recovery Point Objective).

  - Do not underestimate the restore time (RTO: Recovery Time Objective)! Even if it is possible to back up very large data sets regularly, the currently available technology requires time to restore large data collections. Depending on the backup strategy used, data restoration could take several days (or weeks) even on high-performance IT infrastructure. Restore activities must be rehearsed regularly and their performance must be controlled.
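
The interplay of full, differential, and incremental backups with RPO and RTO can be made concrete with a small sketch. It assumes the usual definitions (a differential backup is relative to the last full backup, an incremental backup is relative to the most recent backup of any kind); the schedule, data volume, and throughput are hypothetical figures, not measurements.

```python
from datetime import datetime, timedelta

FULL, DIFF, INCR = "full", "differential", "incremental"


def restore_chain(backups, target):
    """Return the backups needed to restore the latest state <= target.

    backups is a list of (timestamp, kind) tuples.
    """
    usable = sorted(b for b in backups if b[0] <= target)
    fulls = [i for i, b in enumerate(usable) if b[1] == FULL]
    if not fulls:
        raise ValueError("no full backup available before target")
    chain = [usable[fulls[-1]]]          # baseline: last full backup
    tail = usable[fulls[-1] + 1:]
    diffs = [i for i, b in enumerate(tail) if b[1] == DIFF]
    if diffs:
        chain.append(tail[diffs[-1]])    # only the last differential is needed
        tail = tail[diffs[-1] + 1:]
    chain.extend(b for b in tail if b[1] == INCR)
    return chain


def worst_case_loss(backups):
    """Largest gap between consecutive backups: compare this with the RPO."""
    times = sorted(ts for ts, _ in backups)
    return max((b - a for a, b in zip(times, times[1:])), default=timedelta(0))


def estimated_restore_hours(size_tb, throughput_mb_s):
    """Rough restore time for size_tb terabytes: compare this with the RTO."""
    return size_tb * 1_000_000 / throughput_mb_s / 3600


# Hypothetical schedule: full backup on a Sunday, daily differentials,
# incrementals every six hours during the day.
sunday = datetime(2017, 5, 7)
schedule = [
    (sunday, FULL),
    (sunday + timedelta(days=1), DIFF),
    (sunday + timedelta(days=2), DIFF),
    (sunday + timedelta(days=2, hours=6), INCR),
    (sunday + timedelta(days=2, hours=12), INCR),
]

if __name__ == "__main__":
    chain = restore_chain(schedule, sunday + timedelta(days=2, hours=13))
    print([kind for _, kind in chain])
    print(worst_case_loss(schedule))
    print(round(estimated_restore_hours(50, 200), 1), "hours")
```

With these assumed figures, restoring 50 TB at a sustained 200 MB/s already takes almost three days, which is why the restore time has to be rehearsed and measured rather than presumed.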

The above listed measures are neither exhaustive nor comprehensive. The design of the IT infrastructure and the definition of the IT operation processes must be developed based on accurately (and truly) identified risks.
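
The comparison of a current configuration against a recorded baseline, mentioned under monitoring above, can be sketched as follows. The configuration keys and values are hypothetical; in practice they would come from the configuration management records and from the monitoring solution in use.

```python
def config_drift(baseline: dict, current: dict) -> dict:
    """Compare two configuration snapshots and report the deviations."""
    added = {k: current[k] for k in current.keys() - baseline.keys()}
    removed = {k: baseline[k] for k in baseline.keys() - current.keys()}
    changed = {
        k: (baseline[k], current[k])
        for k in baseline.keys() & current.keys()
        if baseline[k] != current[k]
    }
    return {"added": added, "removed": removed, "changed": changed}


# Hypothetical snapshots of a laboratory PC, before and after an update.
baseline = {"os_patch_level": "2017-03", "smb1_enabled": False, "open_ports": (135, 443)}
current = {"os_patch_level": "2017-04", "smb1_enabled": True, "open_ports": (135, 443), "new_service": "telemetry"}

if __name__ == "__main__":
    for category, items in config_drift(baseline, current).items():
        print(category, items)
```

A report of this kind only has value if the baseline itself is trustworthy, which is why the text ties monitoring to accurate configuration management.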

Improvement proposal

What operating platform for industrial and process control applications?

Does Windows® represent a reliable operating system for process control purpose?

Although Windows® XP is definitely an old - even if reliable - operating system, it is interesting to look back at the last 24 months.

Since Windows® XP, Microsoft has made multiple significant architecture and interface changes, limiting the backward compatibility of applications and jeopardizing the development investment of software vendors, while also launching and discontinuing operating system platforms.

Additionally, Microsoft tries to enforce - by questionable means [4] - the adoption of the newest operating system release, jeopardizing the integrity of existing systems, overloading customers' IT infrastructures, and becoming really intrusive. At the same time, significant changes in the operating system update process caused multiple system crashes and dramatically increased the control effort needed to limit and master the update scope.

The result of such aggressive "enforcements" is that system administrators were busier rescuing daily IT operations and fighting data privacy issues than implementing prospective approaches for migrating applications to the next operating platforms.

With the "Patch Tuesday" of April 11th, 2017, a few weeks before the WannaCry attack, Microsoft confirmed [5] that applying Windows® 7 security patches would no longer be possible on systems using the most recent microprocessor architectures based on AMD Zen or Intel Kaby Lake microprocessors, although this operating system is supposed to be supported until January 2020. This behaviour had already been announced during summer 2016 [6]. It should be noted at this point that there is no technical reason for such a limitation; it achieves only a marketing objective: increasing the Windows® 10 market share. If Microsoft pursues this strategy in the future, Windows® 7 based systems will rapidly become the "new XP systems", leaving replacement systems based on recent microprocessor architectures vulnerable.

The multiple architecture and strategy changes in Windows® make development work more and more difficult, increasing the costs and jeopardizing long term support for industrial and process control applications.

As an automation and system engineer, I have never considered Microsoft Windows® a really reliable industry platform: firstly because of the poor transparency of this software platform, secondly because of its poor "real time" performance ("real time" means at least a deterministic temporal behaviour and an accurate and monotonic time management), and thirdly because of the limited ability to plan and to achieve a long term development strategy.
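
What "deterministic temporal behaviour" and "monotonic time management" mean in practice can be illustrated by measuring how late a periodic loop wakes up. The sketch below is only an illustration: Python on a general-purpose operating system is not a real-time environment, and the period and cycle count are arbitrary assumptions. It uses the monotonic clock, which is not affected by adjustments of the system clock.

```python
import time


def measure_lateness(period_s=0.005, cycles=50):
    """Run a periodic loop and return, per cycle, how late it woke up (seconds)."""
    lateness = []
    next_deadline = time.monotonic() + period_s
    for _ in range(cycles):
        delay = next_deadline - time.monotonic()
        if delay > 0:
            time.sleep(delay)
        # Difference between the actual wake-up time and the planned deadline.
        lateness.append(time.monotonic() - next_deadline)
        # Deadlines are derived from the schedule, not from the wake-up time,
        # so a late cycle does not shift all following cycles.
        next_deadline += period_s
    return lateness


if __name__ == "__main__":
    samples = measure_lateness()
    print(f"mean lateness: {sum(samples) / len(samples) * 1e3:.3f} ms")
    print(f"worst lateness: {max(samples) * 1e3:.3f} ms")
```

On a deterministic platform the worst-case lateness is bounded and known in advance; on a general-purpose desktop system it varies with the system load, which is the behaviour criticised in the text.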

Back to POSIX standard and to open (and real time) platforms

If a reasonable and reliable operating system alternative for long term purposes must be considered, the choice is both limited and very large:

- Limited because today the only alternative is represented by *nix based systems, in particular GNU/Linux and its embedded version eLinux, and for hard real time applications VxWorks or QNX.

- Large because multiple flavours of the Linux kernel are available for both operating system and user interfaces (so called "Desktop Environment").

The purpose here is not to advocate for a particular GNU/Linux distribution but to make the reader aware that a real world exists besides Microsoft Windows® and that such operating environments could secure investment and development costs because of their openness and long term reliability.

The best way to secure the portability of applications is to rely on widely accepted and supported standards. POSIX - Portable Operating System Interface - is one such standard, elaborated in the late 1980s and early 1990s to ensure a "smooth" and reliable portability of a developed application between different operating systems and operating system versions.

Together with an appropriate development environment (including portable and open programming languages) and with clean and robust software development practices, POSIX provides a stable and portable software development platform.

Even if the Linux kernel has evolved and undergone some significant changes over the years, well developed software applications are still portable to the newest Linux platform with limited effort, securing long term support.

Platform independent application user interface

Two main approaches can be followed for developing and implementing application user interfaces:

- Web-based user interfaces

- Multi-platform user interfaces based on application frameworks.

W3C-conformant web-based user interfaces

Over the last 20 years, web-based user interfaces have increasingly and efficiently replaced so-called "fat-client" applications, i.e. applications specifically developed for interacting with equipment or server applications.

For equipment suppliers, web-based user interfaces avoid the need to develop and maintain fat-client applications for each operating system type and version in order to interact with equipment. Such an approach represents a real efficiency improvement in terms of long term and security support. However, such a design requires an accurate implementation of the W3C specifications in order to provide seamless support of W3C standards, enabling a neutral and consistent support of web browsers [7] and ensuring the highest compatibility level. It is in particular crucial not to focus on proprietary browser technologies, but simply to comply with non-proprietary standards.
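
As a minimal sketch of this approach, the function below renders an equipment status page using only plain, standard HTML5 elements and no browser-specific features. The function name, the equipment name, and the readings are hypothetical; the article does not prescribe any particular implementation.

```python
from html import escape


def render_status_page(equipment_name: str, readings: dict) -> str:
    """Return a plain HTML5 page listing the current readings of an equipment."""
    rows = "\n".join(
        f'        <tr><th scope="row">{escape(str(name))}</th>'
        f"<td>{escape(str(value))}</td></tr>"
        for name, value in readings.items()
    )
    title = escape(equipment_name)
    return f"""<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="utf-8">
    <title>{title} status</title>
  </head>
  <body>
    <h1>{title}</h1>
    <table>
      <caption>Current readings</caption>
      <tbody>
{rows}
      </tbody>
    </table>
  </body>
</html>
"""


if __name__ == "__main__":
    # Hypothetical equipment and readings, for illustration only.
    print(render_status_page("Incubator 3", {"Temperature": "37.0 °C", "CO2": "5.0 %"}))
```

Because the page relies only on standard markup, it can be checked with a standards validator and displayed by any conformant browser, independently of the operating system of the client.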

Qt-based user interfaces - Portability by design

Initially launched in 1995 by Trolltech AS, a Norwegian software company, Qt is a widely used cross-platform application framework for developing multi-platform applications and graphical user interfaces (GUIs). Adopted by multiple companies (including global players) in various sectors - from automotive to medical devices, including consumer electronics and mobile devices - Qt represents a reliable and stable application platform for limiting development effort, ensuring application portability, and securing development and investment in the long term. Qt is available with both commercial and open source GPL licenses. Qt natively supports various platforms such as embedded Linux, VxWorks, and QNX, as well as Linux, macOS®, and Windows® at desktop level and mobile platforms based on Android or iOS.

It should be noted that Qt can equally be used for developing web-based user interfaces based on HTML5, hybrid user interfaces, and native user interfaces based on widgets.

Robust IT infrastructure design

The challenge for the industry is to preserve business operation and capability.

- Today only very few firms could survive without an operational IT infrastructure.

- Likewise, a significant data loss in case of a disaster usually impacts or even jeopardizes the firm's future. Less than 10% of organisations survive a complete data disaster [8].

Building a robust IT infrastructure is first of all less a GxP requirement than a simple but strong and vital business requirement. The effort to secure the IT infrastructure represents an investment with a direct impact on the business capability of the organisation. The question is not "if" but "when" an attack will occur. Likewise, it should be clear that IT security is like a war in which we are only able to win individual battles, but never the war itself.

The above section already provides a couple of recommendations which must be supported by educated and well-trained system administrators. It is necessary to take a very defensive approach to design IT infrastructure and to deploy a reliable and accurate monitoring and operation approach. IT infrastructure reliability and security are not self-evident but the result of a systematic and defensive approach.

Even if the operational flexibility sometimes seems to decrease a little because of IT security measures, it should be clear that IT security is not negotiable (even for the senior management).

IT infrastructure robustness is only achievable by implementing a defensive design with a strong segregation of the multiple networks, with reliable and verified redundancies, and with accurate operating processes. For instance, a dedicated and secured network has to be available for managing active IT infrastructure components and for connecting server console ports; otherwise, in case of a security breach, it will be very easy (and efficient) for a cracker to modify the configuration of network and storage components, causing an immediate and irrevocable data deletion.

The available time for evaluating security patches and for assessing changes depends directly on the degree of the IT infrastructure robustness.

Conclusion

The industry currently faces multiple and serious IT threats [9] that are able to destroy companies and to jeopardize business capability. We have to recognize that this situation is the result of decisions taken years ago with insufficient care for - or even deliberately ignoring - business capability and IT security. The technology, the design solutions, and the recommendations for building robust IT infrastructure and reliable process control systems have been available for more than 25 years (e.g. POSIX, VxWorks, QNX), including portable user interfaces (e.g. web-based, Qt-based).

The regulated industry and its equipment and solution suppliers must actively work together to support the design of better control systems for laboratory and manufacturing equipment as well as for process automation. The expensive investment required for new equipment no longer fits with the short term support (for only a few years) at Windows® level [10].

It is therefore urgent to initiate and to explicitly require such a paradigm change, since architecture changes will need a couple of years - three to five years - until manufacturing and infrastructure equipment and analytical systems designed on the basis of such a more reliable software architecture are finally available on the market.

Appropriate requirements should be defined and enforced by the regulated customers for long term support and for long term data availability and readability. These requirements must be (prospectively) taken into account by the equipment suppliers.

Within the scope of data integrity and of the required system upgrades, it would make sense to address requirements for long term system support as well.

Such strategy changes are really demanding in terms of training and design efforts for the suppliers: new development platforms, new development environments, probably the use of different programming languages. However, if "Industry 4.0" is to become an effective and efficient reality, such paradigm changes are unavoidable.

In 2016, the first significant troubles caused by the inappropriate design and implementation of "Internet of Things" devices were already noticeable on a global level.

Now is the time to reconsider the IT landscape and the design of industrial computerised equipment and to require and to enforce more reliable system architectures.

Postface

Since the first draft of this article in May 2017, new cyberattacks (see "Petya" or "NotPetya", "GoldenEye") have occurred, impacting various organisations (e.g. UK NHS), including larger firms (e.g. Renault, Maersk, Saint-Gobain, TNT Express (FedEx)) and globally active pharmaceutical companies (e.g. MSD and Reckitt Benckiser). About five weeks after the initial "WannaCry" attack, larger manufacturing facilities (e.g. Honda) were impacted by "WannaCry aftershocks" disabling their business capability.

Like "WannaCry", the recent malware and worms exploit weaknesses in older versions of Windows® operating systems and network services (e.g. SMB). The same remarks regarding update strategies are being made, still ignoring the complexity - and the related limitations - of industrial and real time systems.

These recent events should not be considered a particularly bad "season" for cybersecurity. Rather, the industry should become aware that such attacks will surely become part of daily business.

It is clearly impossible to win such a cyberwar; only individual battles can be won, and a victory never guarantees future success.

Acknowledgements

The author would like to thank Robert (Bob) McDowall, Wolf Geldmacher, Philip (Phil) Burgess, Didier Gasser, Pascal Benoit, Philippe Lenglet, Ulrich-Andreas Opitz, Keith Williams, François Mocq, and Wolfgang Schumacher for helpful and valuable review comments made during the preparation of this article.

Author:

Yves Samson

… founder and director of the consultancy Kereon in Basle, Switzerland. He has over 25 years of experience in qualifying and validating GxP computer systems and IT infrastructure. He is also editor of the French GAMP®4 and GAMP®5. With the e-Compliance Requirements Initiative (ecri.kereon.ch) founded in March 2017 he wants to support the regulated pharmaceutical industry and suppliers in implementing and complying with the e-Compliance requirements.

Source:

[1] In the meantime, in late June 2017, attacks based on (Not)Petya and GoldenEye inflicted severe damage on several organisations, including so-called "global players".

[2] See http://www.ubergizmo.com/2017/05/wannacry-victims-mostly-running-windows-7/

[3] OLE: Object Linking and Embedding

[4] See: Get Windows 10 "update"

[5] https://support.microsoft.com/en-US/help/4012982/the-processor-is-not-supported-together-with-the-windows-version-that

[6] In the meantime, the situation has become more confusing, since this limitation seems to have been reversed. In the absence of a clear official statement from Microsoft about this limitation, it is really difficult for the industry to elaborate reliable support plans.

[7] Implementations based on Chrome or Edge that do not conform to the standards would jeopardize the long term compatibility of web-based user interfaces. Strict conformance with W3C standards, based on HTML5, would secure the durability of the user interface. Firefox and Vivaldi could be meaningfully used for performing the functional testing (OQ) of the user interfaces.

[8] According to a study made by Touche Ross.

[9] Even up-to-date antivirus software is not able to reliably detect ransomware.

[10] See http://www.zdnet.com/article/windows-as-a-service-means-big-painful-changes-for-it-pros/